Balancing Innovation and Security

Things to think about!

Neil Reed

3/7/20242 min read

Large Language Models (LLMs) have emerged as powerful tools, revolutionising natural language understanding, content generation, and problem-solving. However, their widespread adoption has raised valid security concerns. Many organizations perceive LLMs as “plug and play” solutions, often overlooking the essential security controls necessary to mitigate risks effectively.

The Challenge: Balancing Innovation and Security

While LLMs offer unprecedented capabilities, their deployment must align with robust security practices. Here are some key considerations:

1. Risk Awareness

Acknowledge that even with stringent controls and precautions, residual risk remains. The dynamic landscape of cybersecurity demands constant vigilance.

2. Holistic View

The Centre For GenAIOps CIC and I have developed a high-level overview of common LLM scenarios. This framework aims to guide organizations in making informed decisions while leveraging LLMs.

3. Risk-Reduction Measures

Implementing security controls is essential. However, it’s crucial to strike a balance between innovation and risk mitigation. LLMs should undergo rigorous testing, validation, and alignment with organisational security policies.

4. Cat-and-Mouse Game:

Recognise that security is an ongoing process. As we fortify defences, adversaries adapt. The interplay between detection and prevention is akin to a cat-and-mouse game.

In summary, while LLMs empower us, they also necessitate responsible stewardship. By adopting a proactive security mindset, we can harness the potential of LLMs while safeguarding our digital assets.

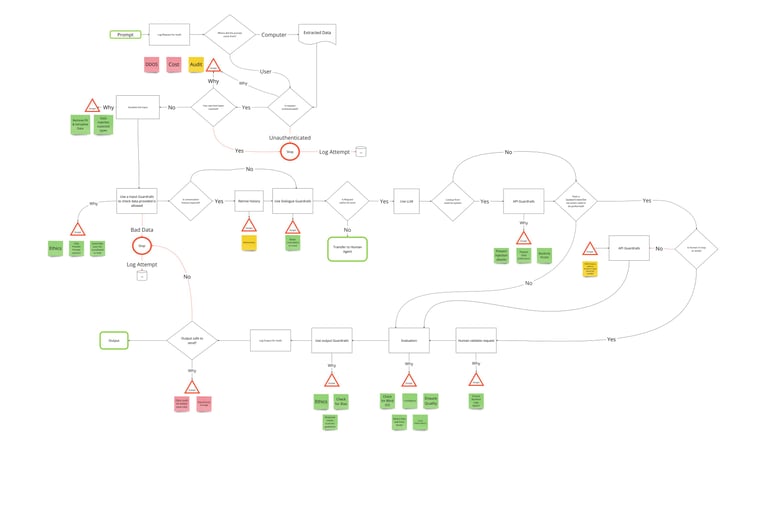

Illustrating the Flow: LLM Usage and Risk Controls

The flow chart above illustrates a typical LLM usage flow and identifies critical points where controls and best practices can be introduced to reduce risk. Let’s explore these stages:

Input Validation and Sanitization

• Prompt Sanitization: Scrutinize user-provided prompts rigorously. Remove or neutralize potentially harmful characters, escape sequences, or special tokens.

• Whitelisting: Define a set of allowed prompt structures. Reject any prompts that deviate from these predefined patterns.

• Regular Expressions: Utilise regex patterns to validate prompts, ensuring adherence to expected formats.

Contextual Boundaries

• Contextual Segmentation: Break prompts into meaningful segments. Separate user instructions from system-generated content.

• Contextual Constraints: Limit the scope of generated responses based on context. Avoid overreliance on user-provided prompts.

Guardrails for Task Steering

• Task-Specific Prompts: Encourage users to provide clear, task-specific prompts. Avoid generic or ambiguous instructions.

• Fallback Mechanism: If the LLM encounters an unfamiliar prompt, gracefully steer the conversation back to a known context or provide a default response.

Monitoring and Anomaly Detection

• Behavioural Analysis: Continuously monitor LLM interactions. Detect unusual patterns, unexpected outputs, or deviations from expected behaviour.

• Alerting Mechanisms: Set up alerts for suspicious prompts or responses. Collaborate with security teams to promptly investigate anomalies.

Human-in-the-Loop Validation

• Validate actions that result in system updates, creations, or deletions. Ensure alignment with the system’s intended purpose.

Contextual Alignment

• Ensure that user requests align with the system’s design context. Avoid unintended or out-of-scope actions.

User Authentication

• Implement secure user authentication methods, such as multi-factor authentication (MFA) and OAuth.

• Validate user credentials against a trusted identity provider.

Rate Limiting and Throttling

• Implement rate limiting to prevent abuse or excessive requests.

• Throttle API calls to prevent resource exhaustion.

Remember, LLMs are powerful tools, but responsible usage is paramount. By integrating security into every stage of LLM adoption, we can harness their potential while safeguarding our digital assets.