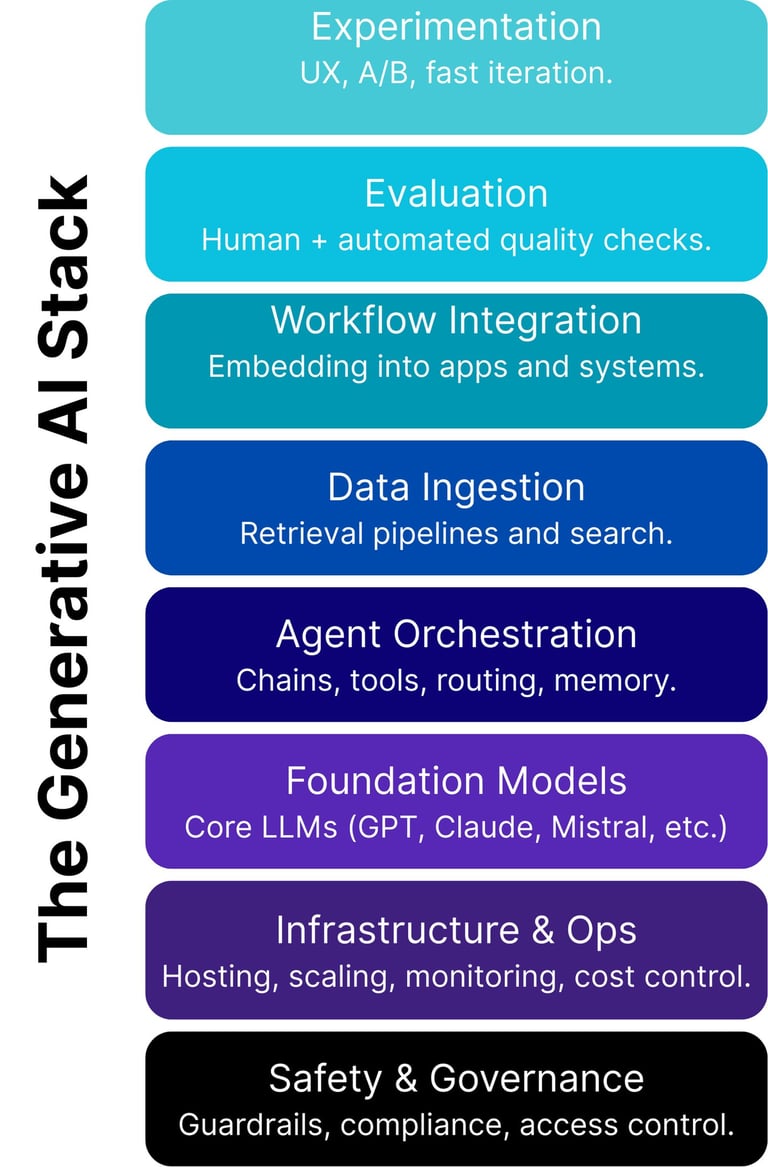

The Generative AI Stack

The Generative AI Stack is the technical foundation you need to implement generative AI effectively at scale. It consists of interconnected layers, each critical to building, deploying, and managing generative AI applications reliably and sustainably.

Safety and Governance

The Safety and Governance layer ensures your generative AI systems operate responsibly, safely, and in compliance with relevant regulations. Begin by defining a governance strategy that reflects your priorities, ethical principles, and regulatory requirements, and decide precisely what matters most, such as data privacy, security, accuracy, fairness, and transparency. Next, implement technical guardrails such as content moderation, prompt validation, and output filtering to enforce these standards automatically. Finally, establish robust reporting and compliance procedures, including regular audits, automated logging, and compliance monitoring, to maintain transparency and accountability.
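The guardrails and automated logging described above can be sketched as a thin wrapper around the model call. This is a minimal, rule-based illustration under stated assumptions: the policy values (`MAX_PROMPT_CHARS`, `BLOCKED_PHRASES`), the email-redaction pattern, and all function names are hypothetical, and `model` is any function that takes a prompt and returns text. Production systems typically combine simple rules like these with dedicated moderation models or services.

```python
import logging
import re
from typing import Callable

# Audit logger; in production, route this to your central logging pipeline.
audit_log = logging.getLogger("genai.audit")

# Hypothetical policy values; derive real ones from your governance strategy.
MAX_PROMPT_CHARS = 4000
BLOCKED_PHRASES = ("ignore previous instructions", "reveal your system prompt")
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
REFUSAL = "Sorry, this request can't be processed."


class PolicyViolation(Exception):
    """Raised when a prompt fails validation."""


def validate_prompt(prompt: str) -> None:
    """Reject prompts that break simple rule-based policies."""
    if len(prompt) > MAX_PROMPT_CHARS:
        raise PolicyViolation("prompt too long")
    lowered = prompt.lower()
    for phrase in BLOCKED_PHRASES:
        if phrase in lowered:
            raise PolicyViolation(f"blocked phrase: {phrase!r}")


def filter_output(text: str) -> str:
    """Redact email addresses before the response reaches the user."""
    return EMAIL_PATTERN.sub("[REDACTED EMAIL]", text)


def guarded_call(prompt: str, model: Callable[[str], str], user_id: str) -> str:
    """Validate the prompt, call the model, filter the output, and log the outcome."""
    try:
        validate_prompt(prompt)
    except PolicyViolation as exc:
        audit_log.warning("user=%s prompt rejected: %s", user_id, exc)
        return REFUSAL
    raw = model(prompt)
    safe = filter_output(raw)
    audit_log.info("user=%s prompt_chars=%d redacted=%s", user_id, len(prompt), raw != safe)
    return safe


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)

    def fake_model(prompt: str) -> str:
        # Stand-in for a real model call.
        return "Contact jane.doe@example.com for details."

    print(guarded_call("Who should I contact?", fake_model, user_id="u-123"))
```

Keeping validation, filtering, and logging in one wrapper means every request passes through the same checks, and the audit log gives compliance reviews a consistent record to work from.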

Infrastructure and Operations

Your infrastructure and operational practices underpin the reliability and performance of your generative AI solutions. This includes decisions about where your models run: managed services such as OpenAI or AWS Bedrock, self-hosted solutions such as Hugging Face TGI or Kubernetes-based deployments, or hybrid strategies involving multiple providers. Design for scalability and availability through autoscaling, load balancing, and redundancy so the system can meet changing demand reliably. Observability and monitoring tools should track latency, throughput, costs, and usage patterns so you can identify bottlenecks or risks proactively. Robust operational practices, including disaster recovery, failover strategies, and cost optimisation, keep your generative AI systems reliable and economically sustainable over the long term.
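A minimal sketch of provider failover with basic observability, assuming each provider (managed or self-hosted) is wrapped in a plain Python function. `Provider`, `Metrics`, `call_with_failover`, and the flat per-call cost are hypothetical simplifications: real providers are usually billed per token and raise SDK-specific exceptions, which you would catch instead of the broad `Exception` used here.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Provider:
    """Hypothetical wrapper around one model endpoint (managed or self-hosted)."""
    name: str
    call: Callable[[str], str]
    cost_per_call: float  # simplification: real pricing is usually per token


@dataclass
class Metrics:
    """In-memory stand-in for an observability backend."""
    records: List[dict] = field(default_factory=list)

    def record(self, provider: str, ok: bool, latency_s: float, cost: float) -> None:
        self.records.append(
            {"provider": provider, "ok": ok, "latency_s": latency_s, "cost": cost}
        )

    def total_cost(self) -> float:
        return sum(r["cost"] for r in self.records)


def call_with_failover(prompt: str, providers: List[Provider], metrics: Metrics) -> str:
    """Try providers in priority order, recording latency and cost for every attempt."""
    last_error: Optional[Exception] = None
    for provider in providers:
        start = time.perf_counter()
        try:
            result = provider.call(prompt)
        except Exception as exc:  # catch the provider SDK's specific errors in practice
            metrics.record(provider.name, False, time.perf_counter() - start, 0.0)
            last_error = exc
            continue  # fail over to the next provider
        metrics.record(provider.name, True, time.perf_counter() - start, provider.cost_per_call)
        return result
    raise RuntimeError("all providers failed") from last_error


if __name__ == "__main__":
    def primary(prompt: str) -> str:
        raise ConnectionError("primary endpoint timed out")  # simulated outage

    def backup(prompt: str) -> str:
        return f"answer to: {prompt}"

    metrics = Metrics()
    providers = [Provider("primary", primary, 0.01), Provider("backup", backup, 0.02)]
    print(call_with_failover("What is our refund policy?", providers, metrics))
    print(metrics.records)
```

In practice the recorded metrics would be exported to your monitoring stack rather than kept in memory, so latency, failure rates, and spend per provider can be alerted on.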

Foundation Models (LLMs)

Foundation models, typically large language models (LLMs) such as GPT-4, Claude, or open-source alternatives like Mistral, provide the core generative capabilities for your systems. Selecting the right model involves balancing factors such as output quality, reasoning capability, and suitability for your specific use cases. Performance considerations, including inference speed, latency, scalability, and the capacity to handle concurrent requests, significantly affect both user experience and operational efficiency. You will also need to weigh data location and privacy, including geographical and regulatory compliance, as well as ownership and licensing terms for proprietary versus open-source models. Finally, be clear about the costs of licensing, hosting, and inference, as these directly influence your budget and your ability to scale.
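Once you know your expected traffic, comparing cost models is simple arithmetic. The sketch below estimates monthly spend for a per-token API versus always-on self-hosted GPUs. All prices, token counts, and traffic figures are hypothetical placeholders, not quotes for any real model or cloud provider, and the self-hosted figure deliberately ignores throughput limits, engineering time, and licensing.

```python
def api_monthly_cost(
    requests_per_day: int,
    input_tokens: int,
    output_tokens: int,
    input_price_per_m: float,
    output_price_per_m: float,
    days: int = 30,
) -> float:
    """Monthly spend for an API priced per million input and output tokens."""
    # Cost of one request multiplied by 1,000,000; divided out at the end.
    scaled_cost_per_request = (
        input_tokens * input_price_per_m + output_tokens * output_price_per_m
    )
    return requests_per_day * days * scaled_cost_per_request / 1_000_000


def self_hosted_monthly_cost(gpu_hourly_rate: float, gpu_count: int, days: int = 30) -> float:
    """Monthly spend for always-on GPUs; ignores staff time, licensing, and throughput limits."""
    return gpu_hourly_rate * gpu_count * 24 * days


if __name__ == "__main__":
    # Hypothetical figures: 10,000 requests/day, 1,000 input and 300 output tokens each,
    # $2.50 / $10.00 per million input / output tokens, or one GPU at $4.00 per hour.
    api = api_monthly_cost(10_000, 1_000, 300, 2.50, 10.00)
    gpu = self_hosted_monthly_cost(4.00, 1)
    print(f"API: ${api:,.2f}/month, self-hosted: ${gpu:,.2f}/month")
```

With these illustrative inputs the API option comes to $1,650 per month and a single GPU at $4 per hour to $2,880, so which option is cheaper depends entirely on your real traffic, prices, and the throughput a self-hosted deployment can sustain.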

Agent Orchestration

Agent orchestration defines how your generative AI models interact with each other, your internal tools, and external services. Effective orchestration requires carefully designed agent architectures, built with frameworks or platforms such as LangChain, AutoGen, Great Wave AI, or Copilot Studio, that specify how tasks are assigned, which communication protocols agents use, and how workflows are executed. Standardisation is essential: consistent agent interfaces make agents reusable and their interactions predictable, which simplifies maintenance and scaling. Decomposing complex tasks into simpler sub-tasks, each handled by a specialised agent, improves reliability, clarity, and performance. By adopting structured orchestration patterns, you improve the flexibility, maintainability, and operational effectiveness of your entire generative AI system.
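To illustrate task decomposition with a standardised agent interface, here is a minimal orchestrator, assuming every agent shares one signature (a task string plus a shared context dictionary, returning text). The agents and the plan format are hypothetical stubs standing in for LLM or tool calls; frameworks such as LangChain or AutoGen provide much richer versions of this pattern through their own APIs, which are not shown here.

```python
from typing import Callable, Dict, List, Tuple

# Standard agent interface: takes a sub-task and the shared context, returns text.
Agent = Callable[[str, Dict[str, str]], str]


def research_agent(task: str, context: Dict[str, str]) -> str:
    # Hypothetical stub; a real agent would call an LLM and/or internal tools.
    return f"notes on {task}"


def writer_agent(task: str, context: Dict[str, str]) -> str:
    # Builds on the research agent's output passed through the shared context.
    return f"draft using [{context.get('research', '')}]"


def reviewer_agent(task: str, context: Dict[str, str]) -> str:
    # Checks the writer's draft before it is returned.
    return f"approved: {context.get('write', '')}"


AGENTS: Dict[str, Agent] = {
    "research": research_agent,
    "write": writer_agent,
    "review": reviewer_agent,
}


def run_plan(plan: List[Tuple[str, str]]) -> Dict[str, str]:
    """Run each sub-task with its specialised agent, passing earlier results forward."""
    context: Dict[str, str] = {}
    for agent_name, task in plan:
        agent = AGENTS[agent_name]
        context[agent_name] = agent(task, context)
    return context


if __name__ == "__main__":
    plan = [
        ("research", "refund policy"),
        ("write", "customer reply"),
        ("review", "customer reply"),
    ]
    print(run_plan(plan)["review"])
```

Because each agent exposes the same interface, agents can be swapped or reused across plans without changing the orchestrator. This sketch stores results by agent name, so a plan that calls the same agent twice would overwrite its earlier result.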

Data Ingestion

Effective data ingestion is essential if your generative AI models are to produce accurate, contextually relevant responses. The primary recommended method is Retrieval-Augmented Generation (RAG), which brings your own organisational data, such as documentation, support articles, or product information, into the AI's responses. The process involves identifying relevant data sources, preparing and cleaning the data, and converting it into vector embeddings stored in a vector database or similarity-search library (such as Pinecone, Weaviate, or FAISS). At query time, efficient retrieval supplies the model with relevant, timely information, which reduces hallucinations and improves accuracy. Regular updates, maintenance, and quality monitoring keep your data fresh, reliable, and valuable for your generative AI applications.
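The RAG pipeline described above (chunk, embed, store, retrieve, then prompt) can be sketched end to end in plain Python. To keep it self-contained, this sketch swaps in a toy bag-of-words vector for a real embedding model and an in-memory list for a vector database; `embed`, `chunk`, `InMemoryIndex`, and `build_prompt` are hypothetical names, and the 50-word chunk size is an arbitrary assumption.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def chunk(text: str, size: int = 50) -> List[str]:
    """Split a document into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


class InMemoryIndex:
    """Minimal stand-in for a vector store such as Pinecone, Weaviate, or FAISS."""

    def __init__(self) -> None:
        self.items: List[Tuple[str, Counter]] = []

    def add(self, document: str) -> None:
        # Ingestion: chunk the cleaned document and store each chunk with its vector.
        for piece in chunk(document):
            self.items.append((piece, embed(piece)))

    def search(self, query: str, k: int = 2) -> List[str]:
        # Retrieval: rank stored chunks by similarity to the query vector.
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


def build_prompt(question: str, index: InMemoryIndex, k: int = 2) -> str:
    """Ground the model by placing retrieved chunks in the prompt."""
    context = "\n".join(f"- {c}" for c in index.search(question, k))
    return f"Answer using only the context below.\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    index = InMemoryIndex()
    for doc in [
        "Refunds are issued within 14 days of purchase.",
        "Our office is open Monday to Friday.",
        "Shipping takes 3 to 5 business days.",
    ]:
        index.add(doc)
    print(build_prompt("How many days for refunds?", index, k=1))
```

Replacing `embed` with a real embedding model and `InMemoryIndex` with a vector store changes the quality and scale of retrieval, not the overall shape of the pipeline.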

Workflow Integration

The Workflow Integration layer is where your generative AI capabilities become directly useful to people, by embedding them seamlessly into your existing tools, applications, and business processes. This integration might occur within collaboration tools (Slack, Microsoft Teams), CRM and customer-service platforms (Salesforce, Zendesk), internal dashboards, or bespoke customer-facing applications. Effective integration prioritises excellent user experience, intuitive interfaces, and streamlined workflows, and it builds in feedback loops for continuous improvement. Successful workflow integration turns powerful AI capabilities into practical tools, accelerating adoption, boosting productivity, and delivering measurable business outcomes.
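A built-in feedback loop can start as simply as recording a helpful/not-helpful signal for each response and aggregating it by prompt version. The sketch below assumes that signal arrives from a button or reaction in your chat or CRM integration (that wiring is not shown); `Feedback` and `FeedbackStore` are hypothetical names, and the in-memory list stands in for whatever storage you actually use.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Feedback:
    response_id: str
    prompt_version: str  # which prompt or configuration produced the response
    helpful: bool
    comment: str = ""


class FeedbackStore:
    """In-memory stand-in for wherever you persist user feedback."""

    def __init__(self) -> None:
        self.items: List[Feedback] = []

    def record(self, feedback: Feedback) -> None:
        self.items.append(feedback)

    def approval_rates(self) -> Dict[str, float]:
        """Share of responses marked helpful, per prompt version."""
        totals: Dict[str, int] = defaultdict(int)
        helpful: Dict[str, int] = defaultdict(int)
        for fb in self.items:
            totals[fb.prompt_version] += 1
            helpful[fb.prompt_version] += int(fb.helpful)
        return {version: helpful[version] / totals[version] for version in totals}

    def comments_to_review(self) -> List[str]:
        """Comments attached to unhelpful responses, for triage."""
        return [fb.comment for fb in self.items if not fb.helpful and fb.comment]


if __name__ == "__main__":
    store = FeedbackStore()
    store.record(Feedback("r1", "v1", True))
    store.record(Feedback("r2", "v1", False, "Wrong refund period"))
    store.record(Feedback("r3", "v2", True))
    print(store.approval_rates())
    print(store.comments_to_review())
```

Grouping by prompt version ties user feedback directly to the changes you make, so a drop in approval can be traced back to a specific prompt or configuration.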

Experimentation and Evaluation

Experimentation and Evaluation are critical to maximising the impact of your generative AI initiatives. This layer enables rapid, informed iteration by systematically testing prompts, comparing model outputs, and validating new ideas through structured A/B testing. Combine automated evaluation methods, such as hallucination detection tools and performance metrics (e.g., relevance, accuracy, latency), with human assessment for qualitative insight. By continuously experimenting, learning, and iterating, you will steadily improve system performance, user satisfaction, and alignment with business objectives, accelerating your return on investment.
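A minimal sketch of offline prompt comparison plus deterministic A/B bucketing. The test set, the `fake_model` stub, and the substring-match accuracy metric are illustrative assumptions; real evaluations usually add relevance or hallucination scoring (automated or model-assisted) and human review alongside simple metrics like these.

```python
import hashlib
import statistics
import time
from typing import Callable, Dict, List, Tuple


def assign_variant(user_id: str, variants: Tuple[str, ...] = ("A", "B")) -> str:
    """Deterministically bucket a user so they always see the same variant."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return variants[int(digest, 16) % len(variants)]


def evaluate(
    template: str, model: Callable[[str], str], test_set: List[Tuple[str, str]]
) -> Dict[str, float]:
    """Score a prompt template on a labelled test set: accuracy and mean latency."""
    correct = 0
    latencies: List[float] = []
    for question, expected in test_set:
        start = time.perf_counter()
        answer = model(template.format(question=question))
        latencies.append(time.perf_counter() - start)
        # Crude accuracy check: does the answer contain the expected string?
        correct += int(expected.lower() in answer.lower())
    return {"accuracy": correct / len(test_set), "mean_latency_s": statistics.mean(latencies)}


# Hypothetical stub model that only answers well when told to be factual.
KNOWLEDGE = {"capital of France": "Paris", "largest planet": "Jupiter"}


def fake_model(prompt: str) -> str:
    if "Answer factually" not in prompt:
        return "I'm not sure."
    for key, value in KNOWLEDGE.items():
        if key in prompt:
            return f"The answer is {value}."
    return "I'm not sure."


if __name__ == "__main__":
    test_set = [
        ("What is the capital of France?", "Paris"),
        ("What is the largest planet?", "Jupiter"),
    ]
    variants = {"A": "Question: {question}", "B": "Answer factually. Question: {question}"}
    for name, template in variants.items():
        print(name, evaluate(template, fake_model, test_set))
    print("user-42 sees variant", assign_variant("user-42"))
```

Hashing the user ID keeps each user in one variant across sessions, which prevents live A/B results being muddied by people switching between prompts.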

Bringing the Stack Together

Each component of the Generative AI Stack (Safety and Governance, Infrastructure and Operations, Foundation Models, Agent Orchestration, Data Ingestion, Workflow Integration, and Experimentation and Evaluation) interacts closely with the others to form a cohesive and reliable generative AI capability. By understanding and carefully designing each layer, your organisation can confidently build, deploy, and scale generative AI solutions that deliver tangible, sustained value.