Generative AI Production Deployment - Where We Stand Now

Mahsa Paknezhad

1/20/2026 · 11 min read

The Adoption Challenge

Despite heavy investment in AI, production deployment remains elusive. An AWS study of over 900 organizations across multiple industries revealed a striking gap: while half expected to have at least 10 AI agents in production by 2025, less than 7% currently have one use case fully deployed [1].

At the Centre for GenAIOps, we've identified that this deployment gap stems from organizations focusing solely on technology while neglecting governance and methodology. Our research shows that successful deployment requires three interconnected elements: the Generative AI Manifesto with clear principles guiding responsible development (the "why"), the GenAIOps Operating Model with structured processes that manage risk while delivering value (the "how"), and the Generative AI Stack providing robust technical architecture (the "what"). When organizations neglect these elements, they encounter predictable barriers that prevent production deployment.

These barriers fall into two major categories that directly reflect gaps in the GenAIOps Framework. Technical challenges loom large: 64% of organizations struggle with integration complexity, 43% battle poor data quality, and many face infrastructure scalability limitations [2][3]. On the organizational front, half of companies report skills gaps, nearly 40% encounter change management hurdles, and only about a quarter properly secure their AI projects.

The economic picture looks even bleaker. Fewer than 1% of executives report significant ROI exceeding 20%, and just 23% can accurately measure their return on investment [4][12]. This disconnect has created what analysts call the "GenAI Divide"—though 80% of companies experiment with generative AI tools, a mere 5% generate substantial business value [5].

Throughout 2024-2025, major AI companies responded by publishing comprehensive frameworks for moving generative AI from prototype to production-ready solutions [6][7]. Success stories do exist: Writer achieved 85% faster content reviews with $10 million in documented savings; ASAPP delivered 91% first-call resolution for complex service issues while cutting chat interaction costs by 77%; and Jabil slashed deployment times by two-thirds while reducing data processing times by 74% [8][9][10].

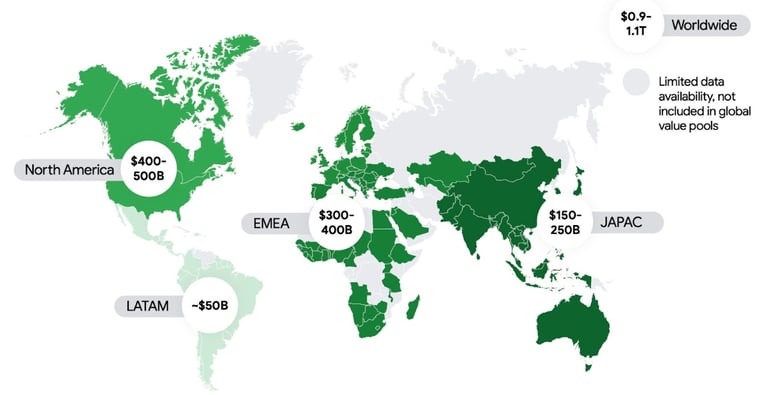

This blog explores how major AI providers are easing the path to production, addressing an urgent need reflected in market projections. The global services market for agentic AI (generative AI systems that autonomously plan and execute multi-step tasks) could reach $1 trillion by 2035-2040 [11], with exact figures varying based on adoption rates and market definitions. Fig. 1 shows Google Cloud's estimate of the global systems integration (SI) services value pool for agentic AI over that period. To understand how the industry is addressing these production challenges, we examine how seven major providers (AWS, Google Cloud, Microsoft, IBM, Oracle, Meta, and NVIDIA) are building frameworks and tools to bridge the deployment gap.

Figure 1: Estimated global systems integration (SI) services value pool for agentic AI, 2035-2040 (Source: Google Cloud, 2025)

AWS Generative AI Production Framework

AWS has built a comprehensive ecosystem addressing enterprise AI needs through three pillars: Amazon Bedrock for foundation model access, SageMaker AI for development, and Bedrock AgentCore for production solutions. More than 100,000 organizations worldwide now use Amazon Bedrock, accessing hundreds of foundation models through a unified API with enterprise-grade security [13].

The platform offers unique capabilities like Amazon Bedrock Guardrails, which blocks up to 88% of harmful content [14] while using Automated Reasoning to identify correct model responses with up to 99% accuracy. SageMaker's integration of JumpStart and HyperPod creates a unified ecosystem that reduces training time by up to 40% while scaling across thousands of AI accelerators.

AWS guides organizations through production deployment with its Well-Architected Generative AI Lens, featuring architectural best practices and a seven-phase lifecycle from scoping to continuous improvement [15]. Its Generative AI Lifecycle Operational Excellence (GLOE) framework further supports organizations throughout application development and operations. GLOE aligns with the seven-layer Generative AI Stack we've defined at the Centre for GenAIOps, with Safety and Governance as the foundation rather than an afterthought.

Google Cloud's Generative AI Deployment Approach

Vertex AI sets itself apart with native multimodal capabilities and advanced reasoning features. The platform provides models with dynamic thinking capabilities and configurable thinking levels to balance response quality against latency and cost [16]. Where competitors rely on separate services, Google's end-to-end MLOps infrastructure offers integrated pipelines, a feature store, and deployment capabilities under a unified architecture that handles text, images, video, audio, and code through a single endpoint.

Google's Vertex AI Agent Builder delivers comprehensive platform capabilities with impressive results: 88% of early adopters report positive ROI [17]. Its governance process spans model development through post-launch monitoring, with risk assessment via research, external expert input, and red teaming. In November 2025, Google released comprehensive agentic AI framework guidelines establishing a five-level taxonomy for autonomous systems, complemented by a technical guide for moving agents from prototype to production [18].

Microsoft Azure OpenAI Service Framework

Microsoft maintains a strong competitive position through its strategic partnership with OpenAI and deep integration with the Microsoft enterprise ecosystem. Azure OpenAI Service offers enterprise-grade access to OpenAI's latest models with support for multiple APIs, reasoning summary, multimodality, and full tools integration [19].

The company's competitive advantage stems from its unified ecosystem combining Foundry (its enterprise data platform for AI applications), Machine Learning, and Content Safety services. This tight coupling with OpenAI's models has helped secure adoption in 70% of Fortune 500 companies [20], while the 2025 State of Cloud Security Report indicates 30% of organizations now use Azure OpenAI.

Microsoft's 2025 strategy emphasizes evolution from generative to agentic AI, introducing multi-agent support and AI-assisted operations within Azure's secure framework [21]. Microsoft Foundry now unifies the entire AI app lifecycle with platform-wide tools and governance for enterprise deployment, supported by Microsoft's Responsible AI Standard that focuses on identifying, measuring, and mitigating potential harms.

IBM watsonx.ai Developer Studio

IBM positions watsonx.ai as an enterprise-ready platform with comprehensive governance and hybrid cloud deployment options. The system integrates three components: watsonx.ai for model development, watsonx.data for data management, and watsonx.governance for compliance—a comprehensive approach that earned IBM leadership recognition in Gartner's 2025 Magic Quadrant for Data Science and Machine Learning Platforms [22].

IBM differentiates through its model lifecycle management, inferencing, and access policy controls [23]. The platform enables deployment of generative AI models as REST services via a common API while maintaining strict governance. Recent innovations include pre-built domain agents specialized for HR, sales, and procurement functions, with sophisticated multi-agent orchestration capabilities.

The platform's hybrid approach and FedRAMP authorization on AWS GovCloud demonstrate IBM's focus on regulated industries and government customers [24]. FedRAMP certification ensures cloud providers meet strict security requirements, making IBM particularly attractive for organizations in highly regulated sectors. Its governance-first architecture exemplifies what we advocate: embedding compliance and risk assessment from the beginning rather than adding them later.

Oracle Cloud Infrastructure Generative AI Framework

Oracle has woven generative AI throughout its technology stack. Oracle Cloud Infrastructure (OCI) Generative AI Service provides access to foundation models for enterprise use cases and enables fine-tuning with custom datasets. Oracle Fusion Cloud Applications now feature AI-powered summarization, while Database 23ai incorporates AI Vector Search and natural language SQL queries. Their APEX 24.2 development platform offers enhanced AI assistant capabilities supporting enterprise use cases from customer operations to risk management [25][26].

Oracle's strategy centers on the AI Factory, a comprehensive customer and partner support service accelerating AI adoption across Oracle Cloud, Database, and Fusion Applications. In 2025, they unveiled the Fusion Applications AI Agent Marketplace—an online store where customers can buy and deploy AI agents directly within Oracle's cloud applications [27].

The company has expanded partnerships with AWS and Azure, creating Oracle Database integrations that allow customers to combine Oracle's AI Database with advanced services from other cloud providers. Oracle deepened its collaboration with NVIDIA in 2025, integrating over 160 AI tools and 100 NVIDIA NIM microservices into Oracle Cloud Infrastructure [28]. A $20 billion cloud-computing deal with Meta further demonstrates the massive scale of enterprise AI infrastructure requirements.

Meta's Generative AI Technology Stack and Infrastructure

Throughout 2024-2025, Meta contributed significantly to generative AI production deployment frameworks, with PyTorch-native solutions enabling scalable deployment of large language models [29]. The company's research division, Meta FAIR, has pushed boundaries in multimodal AI by creating the Chameleon family of models. Unlike traditional systems that process only one type of input, Chameleon can handle text and images simultaneously—much like how humans naturally process information [30].

This multimodal approach extends across Meta's broader AI portfolio. The company has built SAM 3D for reconstructing three-dimensional objects from single images, Movie Gen for creating videos, and the Generative Ads Model (GEM), which stands as the industry's largest foundation model for recommendation systems [31][32][33].

Running these technologies at scale requires sophisticated optimization tools. Meta developed Zoomer to fine-tune GPU workloads, producing tens of thousands of profiling reports each day. The company also created Ax, an open source platform that uses machine learning to optimize AI model performance [34][35]. Together, these tools help Meta maintain AI systems serving billions of users worldwide.

The financial commitment backing these initiatives is substantial. Meta expected to spend between $70 billion and $72 billion on capital expenditures in 2025, roughly $30 billion more than the previous year [36]. This investment funds the infrastructure needed to train, deploy, and scale the company's growing collection of AI technologies.

Physical infrastructure is expanding to match these ambitions. Meta is building a gigawatt-scale data center and plans to bring the one-gigawatt Prometheus super cluster online in 2026 at its New Albany, Ohio location [37]. These facilities will provide the computational power necessary for large-scale AI development and support Meta's ecosystem of technology partners.

NVIDIA NIM and NeMo Production Optimization Frameworks

NVIDIA offers enterprise AI solutions through NVIDIA NIM (Inference Microservices), a suite of containerized services for deploying AI models across cloud, data center, and edge environments [38]. These microservices are available on major cloud marketplaces including AWS, Google Cloud, Microsoft Azure, and Oracle Cloud, providing flexible multi-cloud deployment options.

The company's Omniverse platform delivers APIs, SDKs, and services that let developers integrate OpenUSD, NVIDIA RTX rendering, and generative physical AI into existing software tools [39]. OpenUSD—an open-source framework originally developed by Pixar—enables interchange and collaboration within 3D scenes, allowing teams to work together in real-time while maintaining a unified scene description. NVIDIA has expanded Omniverse with generative AI models for physical AI applications spanning robotics, autonomous vehicles, and vision systems.

Leading companies including Accenture, Siemens, Microsoft, and Ansys have adopted Omniverse to accelerate industrial AI development. NVIDIA's market dominance is evident in its commanding 86% AI GPU and 90% data center GPU market shares in 2025 [40].

NVIDIA NeMo Framework 2.0 provides a flexible, Python-based framework that integrates into existing developer workflows with capabilities like code completion, type checking, and programmatic extensions. This framework covers the complete AI development lifecycle through modular microservices: NeMo Curator for data preparation, NeMo Customizer for model fine-tuning, NeMo Evaluator for quality assessment, and NVIDIA NIM for deployment [41].

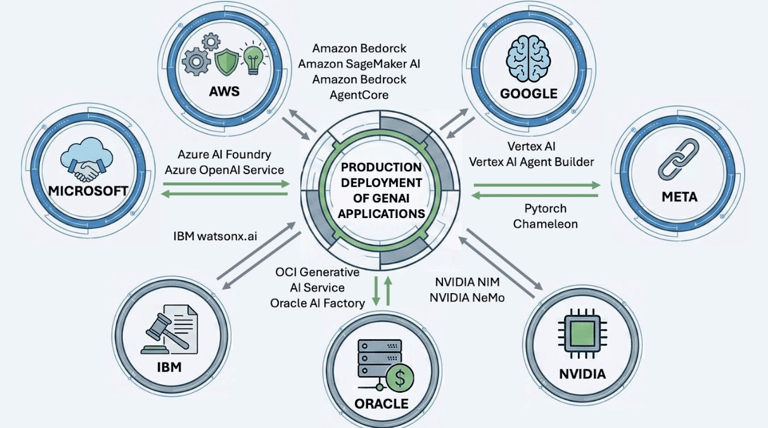

Fig. 2 shows the converging ecosystem of major AI companies addressing production generative AI deployment challenges. These companies contribute complementary platforms and services spanning foundation models, development frameworks, and deployment infrastructure.

Figure 2: Major technology providers supporting production generative AI deployment

Strategic Roadmaps: Accelerating Production Deployment

The enterprise AI landscape is rapidly evolving as major technology companies shift from experimental pilots to production-scale deployments. Each company pursues distinct but complementary strategies addressing fundamental adoption barriers while positioning for the projected trillion-dollar market by 2035-2040.

AWS leads infrastructure investment with $125 billion in capital expenditures and introduced Amazon Bedrock AgentCore in July 2025 [42][43], addressing the critical gap where developers previously spent months building foundational infrastructure for session management and observability. Their "Move to AI" modernization pathway provides structured guidance for transforming application portfolios, directly tackling integration complexity that affects most organizations.

Microsoft has committed $80 billion to AI-optimized data centers through 2028 [44], addressing capacity constraints that historically limited enterprise deployments. Azure AI Foundry now supports over 70,000 customers and processes 100 trillion tokens quarterly [45], demonstrating the massive infrastructure required for enterprise-wide AI adoption.

Google emphasizes unified multimodal architecture under single endpoints, a contrast to competitors' separate service approaches. Their Agent Development Kit has been downloaded over 8 million times, and by donating the Agent2Agent protocol to the Linux Foundation [46], Google demonstrates commitment to open standards that reduce integration complexity.

The economic challenges of AI adoption, where meaningful ROI remains elusive for most, are being addressed through flexible pricing models. AWS introduced new service tiers including a Flex option for cost-effective model evaluations. Microsoft offers provisioned throughput, pay-as-you-go, and batch processing options to match workloads with cost structures [47][48]. Google provides free credits for new customers, while IBM offers promotional subscription pricing to make enterprise AI more accessible during critical adoption phases.
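To make the trade-off between these pricing models concrete, the following is a minimal sketch of a break-even comparison. All prices, the flat-fee shape of provisioned throughput, the batch discount, and every name in the code are hypothetical assumptions for illustration; none of them comes from a provider's price list, and real offerings add capacity limits and commitment terms that this sketch ignores.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PricingAssumptions:
    """Hypothetical prices for illustration only (not real provider rates)."""
    pay_as_you_go_per_million_tokens: float  # USD per 1M tokens, billed on usage
    provisioned_monthly_fee: float           # USD flat monthly fee (assumed uncapped)
    batch_discount: float                    # assumed fraction off pay-as-you-go


def cost_pay_as_you_go(tokens_millions: float, p: PricingAssumptions) -> float:
    """Monthly cost when every token is billed at the on-demand rate."""
    return tokens_millions * p.pay_as_you_go_per_million_tokens


def cost_batch(tokens_millions: float, p: PricingAssumptions) -> float:
    """Monthly cost when all work can wait for discounted batch processing."""
    return cost_pay_as_you_go(tokens_millions, p) * (1.0 - p.batch_discount)


def break_even_tokens_millions(p: PricingAssumptions) -> float:
    """Monthly volume above which the flat fee beats on-demand billing."""
    return p.provisioned_monthly_fee / p.pay_as_you_go_per_million_tokens


def cheapest_option(tokens_millions: float, p: PricingAssumptions,
                    latency_sensitive: bool) -> str:
    """Pick the lowest-cost option; batch is excluded for latency-sensitive work."""
    costs = {
        "pay_as_you_go": cost_pay_as_you_go(tokens_millions, p),
        "provisioned": p.provisioned_monthly_fee,
    }
    if not latency_sensitive:
        costs["batch"] = cost_batch(tokens_millions, p)
    return min(costs, key=costs.get)


# Hypothetical example values.
example = PricingAssumptions(
    pay_as_you_go_per_million_tokens=2.0,
    provisioned_monthly_fee=10_000.0,
    batch_discount=0.5,
)
```

Under these assumed numbers, a team would compare its expected monthly token volume against `break_even_tokens_millions(example)` before committing to provisioned capacity, and route work that can tolerate delay to batch processing.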

All major companies now converge on agentic AI as the next frontier, with McKinsey research showing 62% of organizations already experimenting with AI agents [49]. These companies proactively address regulatory requirements—IBM achieved FedRAMP authorization for federal deployments while supporting validation against 12 compliance frameworks including the EU AI Act [50]. Google updated its AI Principles to emphasize responsible development with appropriate human oversight, while Microsoft grounds its approach in identifying and mitigating potential harms.

This shift from technology experimentation to business transformation represents a fundamental change in enterprise AI adoption. With global AI spending projected to have reached $1.5 trillion in 2025 and enterprise adoption reaching 78% [51][52], the window for establishing market leadership in production AI deployment is rapidly narrowing.

At the Centre for GenAIOps, we've observed that successful organizations follow our three-phase Operating Model: Strategy (curate, learn, define, discover), Proof of Concept (build, refine, test, validate), and Productionisation (integrate, scale, sustain, monitor). This structured approach, combined with our Generative AI Manifesto principles (leading with purpose, designing with transparency, ensuring human oversight, and promoting governance), enables organizations to move from the current production deployment rate of under 7% toward sustainable, enterprise-scale AI adoption.
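The three phases and their activities can be written down as a small data structure, which is one way a team might track where a use case sits in the Operating Model. The phase and activity names below come from the text; the strict phase-by-phase gating, and the function and variable names, are assumptions made for this sketch rather than part of the published model.

```python
from enum import Enum
from typing import Optional, Set, Tuple


class Phase(Enum):
    STRATEGY = "Strategy"
    PROOF_OF_CONCEPT = "Proof of Concept"
    PRODUCTIONISATION = "Productionisation"


# Activities per phase, as listed in the Operating Model description.
ACTIVITIES = {
    Phase.STRATEGY: ("curate", "learn", "define", "discover"),
    Phase.PROOF_OF_CONCEPT: ("build", "refine", "test", "validate"),
    Phase.PRODUCTIONISATION: ("integrate", "scale", "sustain", "monitor"),
}


def current_phase(completed: Set[str]) -> Optional[Phase]:
    """Return the first phase with unfinished activities, or None if all are done.

    Assumption: a phase counts as finished only when all of its activities are.
    """
    for phase in Phase:  # Enum iteration follows definition order
        if not set(ACTIVITIES[phase]) <= completed:
            return phase
    return None


def remaining_activities(completed: Set[str]) -> Tuple[str, ...]:
    """List the unfinished activities of the current phase, in model order."""
    phase = current_phase(completed)
    if phase is None:
        return ()
    return tuple(a for a in ACTIVITIES[phase] if a not in completed)
```

For example, a use case that has finished all four Strategy activities plus `build` would be reported as being in the Proof of Concept phase with `refine`, `test`, and `validate` still open.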

The major technology providers examined in this article (AWS, Google Cloud, Microsoft, IBM, Oracle, Meta, and NVIDIA) each address different aspects of this framework. Each company's approach reflects its core competencies and market positioning, creating a diverse ecosystem where enterprises can select solutions aligned with their specific requirements and strategic objectives. The ultimate winners will be those who demonstrate clear business value while addressing the fundamental barriers preventing widespread AI adoption across enterprise environments.

Disclaimer: The views and opinions expressed in this blog are my own and do not necessarily reflect the official policy or position of Amazon Web Services (AWS) or Amazon.

References

https://workos.com/blog/why-most-enterprise-ai-projects-fail-patterns-that-work

https://www.larridin.com/blog/state-of-enterprise-ai-in-2025

https://aws.amazon.com/solutions/case-studies/jabil-manufacturing-transformation-generative-ai/

https://aws.amazon.com/solutions/case-studies/asapp-case-study/

https://aws.amazon.com/solutions/case-studies/innovators/writer/

https://services.google.com/fh/files/misc/agentic-ai-tam-analysis.pdf

https://docs.cloud.google.com/vertex-ai/generative-ai/docs/start/get-started-with-gemini-3

https://ppc.land/google-cloud-releases-comprehensive-agentic-ai-framework-guideline/

https://www.ibm.com/architectures/patterns/genai-ibm-platform

https://blogs.oracle.com/ai-and-datascience/future-generative-ai-what-enterprises-need-to-know

https://blogs.oracle.com/jobsatoracle/oracle-ai-world-2025-accelerating-the-ai-age

https://about.fb.com/news/2025/11/new-sam-models-detect-objects-create-3d-reconstructions/

https://www.reuters.com/business/metas-profit-hit-by-16-billion-one-time-tax-charge-2025-10-29/

https://www.nvidia.com/en-us/ai-data-science/products/nemo/get-started/

https://www.globaldatacenterhub.com/p/amazon-q3-2025-the-125b-ai-infrastructure

https://azure.microsoft.com/en-us/blog/azure-ai-foundry-your-ai-app-and-agent-factory/

https://azure.microsoft.com/en-us/blog/maximize-your-roi-for-azure-openai/

https://www.mckinsey.com/capabilities/quantumblack/our-insights/the-state-of-ai